Porting over old content with only slight grammar updates. Originally posted to Redox here in January 2019. Worth checking out, because the graphics are way better there.

The magic of data exchange, visualized.

Healthcare information technology is a mess of acronyms. As we approach the New Year, it's fairly common to look back on the year and do a retrospective. I thought it might be fun to do a little time traveling (unfortunately sans hot tub) further and take us back into the dark and dirty history of some of the core standards we at Redox use and love every day.

Note: René Spronk over at Ringholm has a fantastic, thorough, and well-sourced guide through the early days of HL7 that I cannot recommend reading enough. This blog post draws heavily on that work’s content.

Second note: xkcd has the perfect primer for this history. This blog post draws thematically from that work’s content.

A healthcare worker in the 1970s. Or just a guy in the 1970s. Unclear.

The year is 1979. A gallon of gas costs 89 cents. The hit song of the year is "My Sharona" and the snowboard was just invented. Several employees at Redox are still in grade school or not born yet.

More pertinently, computers are still mainframes and, thus, the dominant EHR vendors are SMS and McAuto. Connectivity at the time was limited to running a coax cable from a computer terminal on the ward to the various departmental mainframes to give users access to the various functionalities. In other words, interoperability and even the potential of exchange were bleak. The dominant vendors would charge vast sums ($100,000 - $500,000) for the simplest customized and un-reusable outputs and exchanges of data. Otherwise, manual keying of the data (human interfacing), jerry-rigged screen scraping, or capture of printer output were all rudimentary interfacing techniques.

Luckily, the International Organization for Standardization (ISO) chose to develop the Open Systems Interconnection (OSI) model at this point. Mapping onto several layers of existing technologies, such as coaxial cables (OSI Layer 1), Ethernet (OSI Layer 2), and TCP/IP (IP at Layer 3 and TCP at Layer 4), it defined how the communication functions of computing systems work without regard to their underlying internal structure and technology. While this wasn't just for healthcare, it's foundational in understanding standards development, in that by partitioning a communication system into abstraction layers, it opened the door to healthcare standards built at the top layer (Layer 7). Spoiler: This is where HL7 gets its numeral.

Otherwise, the 70s were pretty uneventful. ANSI X12 was founded in 1979 when a bunch of companies realized that the work that groceries were doing with EDI (yes, supermarkets are arguably the granddaddy of them all when it comes to electronic exchange) should be vendor-neutral and interoperable. Some would argue that the Transportation Data Coordinating Committee (TDCC) was first, but we'll side with the former since it's more fun to think of delicatessens pioneering this stuff than railroad moguls.

The excitement for the nascent standards growing in the 80s was palpable

Our time machine jumps forward into the 1980s. Wheel of Fortune, Sally Ride, heavy metal... the 1980s are a time of change. And just as Mount St. Helens erupts, so too does the creation of standards.

X12 has started to actually become a thing. X12 standards are published for the first time in 1981 and encompass the transportation, food, drug, warehouse, and banking industries. With file size still at a premium, X12 (and many other early EDI standards) uses delimited formats that are compact and well-defined.

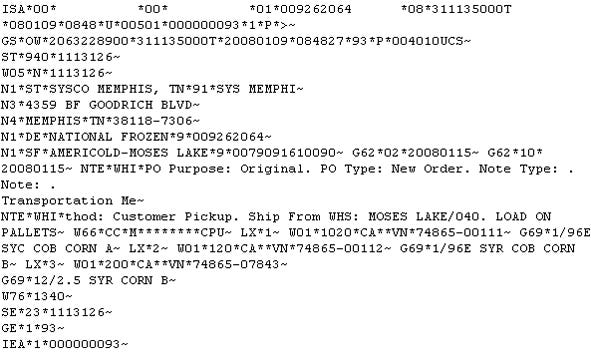

Delimited by asterisks and tildes. Would set the stylistic precedent for years to come.
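For a rough feel of what "delimited by asterisks and tildes" means in practice, here is a minimal sketch in Python. The sample string is made up for illustration and is not a valid interchange, and the `parse_segments` helper is hypothetical. It assumes `*` separates elements and `~` ends each segment; real X12 files declare their own delimiters in the header.

```python
# Illustrative only: a made-up fragment in an X12-like layout.
# Assumption: "*" separates elements and "~" terminates each segment.
SAMPLE = "ST*850*0001~BEG*00*SA*PO12345**19810315~SE*3*0001~"


def parse_segments(raw, element_sep="*", segment_term="~"):
    """Split a delimited string into a list of (segment_id, elements) tuples."""
    segments = []
    for chunk in raw.split(segment_term):
        chunk = chunk.strip()
        if not chunk:
            continue  # skip the empty piece after the final terminator
        parts = chunk.split(element_sep)
        segments.append((parts[0], parts[1:]))
    return segments


parsed = parse_segments(SAMPLE)
for seg_id, elements in parsed:
    print(seg_id, elements)
```

Note the two asterisks in a row in the middle segment: an empty element still takes up its position, which is how these formats stay compact without naming every field.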

Big suppliers of the time (kids, do you remember Kmart?) demand EDI integrations, although value-added networks (the forefathers of today's X12 clearinghouses) are needed to facilitate the exchange, acting as necessary data brokers to connect disparate networks in the pre-Internet era. Messages would be transported over dial-up or, for large users, a leased-line connection to a value-added network (VAN), using proprietary protocols.

The United Nations is impressed by the success of X12 (apparently) and decides to make its own derivative format. EDIFACT is born:

Pretty much the same as X12, but delimited by plus signs. #innovation

Meanwhile, healthcare starts to get in on the fun. ASTM (American Society for Testing and Materials) defines the first set of standards, covering messaging between a lab system and instruments. It again draws inspiration from X12 and acts as a competitor to HL7 for years:

Comma-delimitation was cutting edge standards tech at the time.

In 1986, work begins on Medix (IEEE P1157 Medical Data Interchange), a name/value pair oriented method of exchange. Created by researchers and highly theoretical in nature, it never really gains traction but inspires some big brains later in the 2000s with its information model-driven approach.

However, over on the West Coast, in a beautiful quaint bayside town called San Francisco, the University of California at San Francisco (UCSF) Medical Center, under the direction of its CIO Donald W. Simborg, begins the first steps to create a more comprehensive standard. As the industry faces a proliferation of systems at the departmental level with the growth of microprocessors over mainframes, UCSF pioneers the first network in 1981, with four minicomputers connected to exchange transactions between the UCSF registration, clinical laboratory, outpatient pharmacy, and radiology systems. In contrast to the system-to-system modes of communication popular today (push and query), this first network takes a full broadcast approach, with each party sending all updates to all systems.

Simborg sees the success at UCSF and, as one does in San Francisco, makes a startup to commercialize this technology in 1984. By 1985, his company decides to make their proprietary technology public domain in the hopes it would be adopted as a standard across the industry, buoying their struggling company. Four of the hospitals using STATLan, their product, join the first meeting to refine the standard. Having created a non-proprietary OSI Level 7 healthcare language protocol, this would represent the first Health Level 7 meeting. HL7v1 (!) is born in October 1987:

Backslash delimited...inching closer to the beauty of pipe delimitation.

The rest of the 80s sees a push in the marketing of the nascent standard, pitching it as a "puzzle piece" or "turn-key solution" and claiming a reduction of costs from around $100,000 to $10,000 for HL7 interfaces. Faced with this perceived threat, many major vendors, such as SMS and Sunquest, join HL7 to attempt to monitor or, more nefariously, perhaps influence the organization. They aim to debunk the perceived ease and cheapness of setting up interfaces using HL7 as opposed to devising custom solutions; ironically, their presence in the organization only solidifies it further in the industry. Marketing considerations even influence the naming of the standard, as the organization "upgrades" to version 2 with few major differences. In the words of a major HL7 contributor, Wes Rishel: "The decision to skip to a new major version number was informal and based on the concept that it made the standard sound more mature rather than any deep discussion of family characteristics of versions". Much can be learned about the power of informal branding here.

Some of the OG HL7 team. Titans of tech. Colossus of clout. Sultans of standards.

In the face of this growth, HL7's competitors melt away. ASTM sees its lab niche as defensible but tries to remain in sync with HL7 by holding workgroup meetings in the same place, then by joining HL7's meetings, and finally by deprecating its standard. Medix loses the marketing battle by focusing on data architects and abstract thinkers rather than clinical users and vendors. Additionally, it loses the data formatting war, as delimited standards take root and name/value pairs fall by the wayside in healthcare for years (aside from DICOM and a random German first-line care standard).

While they somewhat failed compared to HL7, these early standards contributors do much to influence the path of the industry, including HL7's formatting (similar to ASTM) and later structure (HL7 chose to make its next standard, HL7v3, and its Reference Information Model as theoretical as Medix). In particular, this quote by ASTM's and later HL7’s Clement McDonald still rings true years later and represents one of the most metaphoric takes on interfacing you'll find anywhere:

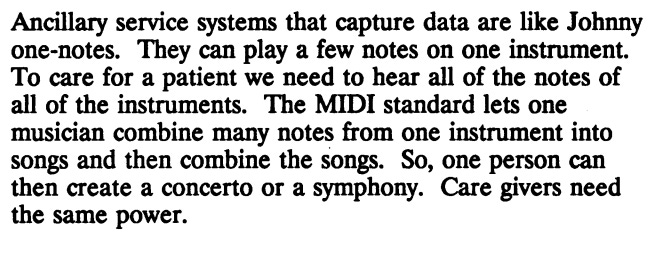

Absolute 🔥

Truer words never have been spoken (well, maybe, but at least in terms of HIT integration)

Our time machine reaches the 90s. We're talking about Rugrats and Doug. We're talking about the fall of the Soviet Union and the associated dissolution of socialism in Eastern Europe leading to various new independent states. We're talking about the release of the ultimate Christmas song of all time with long-lasting cultural impacts, Mariah Carey's "All I Want for Christmas is You". We're talking about presidential scandals that in retrospect look remarkably tame and civil. Most importantly, we're talking about the proliferation of personal computing.

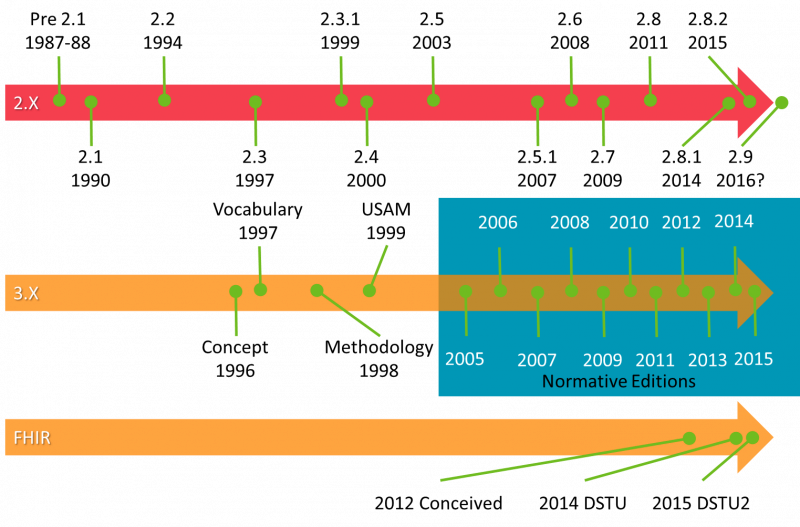

HL7’s timeline. FHIR now has a normative edition too (not pictured).

Personal computers mean all users in hospitals now have access to software, which means a growth in departmental and further specialized programs. As a result, there is the need for data exchange between them, which (with HL7 entrenched now in the industry) meant a boom in HL7's reach and power. They start revising more rapidly, moving from 2.1 to 2.4 (largely considered the definitive and prevalent version of HL7 even today aside from some use cases for 2.5.1) during the decade. Hospitals were now requiring vendors to support HL7 to make sales, which cemented it in its position today. The majority of Redox’s Data Models look back at least partially to this decade for their history.

Given the growth around HL7 in this decade, the technologies available at the time were used for security and transport. In the pre-Internet boom era, TCP/IP (through MLLP, the Minimal Lower Layer Protocol) was defined as the suggested connective tissue, with VPNs (using IPsec) as the security mechanism where needed. However, almost all software was on-premises, as the idea of an application being hosted remotely was laughable due to the slowness of connections at the time. As a result, this communication protocol legacy still lives with us today.
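To make MLLP a little less abstract: as I understand the framing, it just brackets each message with a start byte (vertical tab, `0x0B`) and a two-byte trailer (file separator `0x1C` plus carriage return `0x0D`) before sending it over a TCP socket. Below is a minimal Python sketch of that wrapping. The helper names, the sample pipe-delimited message, and the UTF-8 encoding are all assumptions for illustration, not anything taken from a real interface.

```python
# Sketch of MLLP framing; names and sample data are hypothetical.
START_BLOCK = b"\x0b"    # <VT>, marks the start of a message
END_BLOCK = b"\x1c\x0d"  # <FS><CR>, marks the end of a message


def mllp_wrap(message: str) -> bytes:
    """Wrap an HL7v2 message in MLLP framing (UTF-8 is an assumption here)."""
    return START_BLOCK + message.encode("utf-8") + END_BLOCK


def mllp_unwrap(frame: bytes) -> str:
    """Strip MLLP framing, refusing anything that is not a complete frame."""
    if not (frame.startswith(START_BLOCK) and frame.endswith(END_BLOCK)):
        raise ValueError("not a complete MLLP frame")
    return frame[len(START_BLOCK):-len(END_BLOCK)].decode("utf-8")


# Made-up two-segment message; segments end with carriage returns.
SAMPLE_HL7 = (
    "MSH|^~\\&|SENDER|FACILITY|RECEIVER|FACILITY|201901010000||ADT^A01|MSG0001|P|2.4\r"
    "PID|1||12345^^^MRN||DOE^JANE\r"
)

frame = mllp_wrap(SAMPLE_HL7)
roundtrip = mllp_unwrap(frame)

# Pipes split a segment into fields; position carries the meaning.
msh_fields = roundtrip.split("\r")[0].split("|")
print(msh_fields[8])  # message type field of this sample
```

In a real interface the bytes from `mllp_wrap` would be written to a socket and the receiver would answer with an acknowledgment framed the same way; that part is left out here to keep the sketch self-contained.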

At this point, you may be thinking "What about DICOM? It's still around! It's my favorite standard but you haven't mentioned it." On the other hand, you probably aren't thinking that, unless you’re very into medical imaging and modalities, but a great question nonetheless, reader. With the introduction of computed tomography (CT) and other digital diagnostic imaging modalities in the 1970s, and the increasing use of computers in clinical applications, a bunch of smart radiologists (namely, the American College of Radiology and the National Electrical Manufacturers Association) saw a need to get those images out of the devices and into centralized archives, so they started work on an ACR-NEMA standard. This would hang around for a while, but only became DICOM in 1993 when they tore up most of what they'd written and started over again on the third version. Given its prehistoric origins, even though it was released in the 1990s, it is somewhat archaic in format: the electronic equivalent of magnetic tapes, a name/value format that also relies explicitly on TCP/IP as a communication method. It had little influence on standards outside of imaging departments, as it is particularly poorly suited to the Internet-enabled world, and the community is struggling to define its future for image interoperability between hospitals.

Similarly, the National Council for Prescription Drug Programs (NCPDP) was founded in 1976 as a consortium of retail pharmacies, benefit managers/insurers, and industry vendors, hanging around to maintain a list of pharmacies and NDC (National Drug Code) changes. They finally make a standard (SCRIPT) relevant to the healthcare world in 1997, which (surprise) is based on X12 and HL7. It defines e-prescribing transactions between provider and pharmacy, as well as medication history transactions between provider and pharmacy benefit managers.

You can tell it's NCPDP that made this image by how cool the pharmacist looks in it.

Lastly, towards the end of the decade, professionals from both the medical imaging and healthcare information technology domains get together to address what they see as the technical "Tower of Babel", in that disparate devices lack a common means of communication (sound familiar?). This self-described movement/initiative/framework repeatedly states that they are not interested in creating new standards, but defining the right way for actors within health IT to interact, which they call “integration profiles” (but which could also be described as “standards of standards”, “super-standards”, or “more rules”). Creating at first seven radiology focused workflow profiles based on existing content standards like HL7 and DICOM, Integrating the Healthcare Enterprise (IHE) is born in 1998.

Iconic. Bring back jean everything

We jump forward again and, honestly, I'm not sure really what's happening in the 2000s. Notably, on the geopolitical front, 9/11 happens, influencing world affairs for years to come, and late in the decade, the US elects its first African American president. However, sandwiched between the 1990s and 2010s, it's highly possible we'll eventually compress the bookend economic collapses (Dotcom and Financial) into one event and entirely forget this strange decade of people wearing baggy clothing with dumbphones even occurred when compared with the thrills of 90s kid life and the unprecedented highs/lows of our current decade.

In terms of standards, however, the 2000s show us the rise of the Internet and its influence on the standards of the time. XML (a W3C Recommendation since 1998) is developed to represent data in a human-readable way, cleaner and sexier than cluttered delimited formats. HTTPS, formally specified in 2000, offers a secure and Internet-based alternative to raw TCP/IP connections or FTP. SSL and its successor TLS (first published in 1999) offer new exciting ways to secure connections of all types. Standards, formerly faxed when HL7 members called in requests, can now be listed online.

The 2000s, therefore, represented an era of experimentation, where standards tried the hottest new acronyms as quickly as they could. XML, SOAP, and SOA (service-oriented architecture) dominate the decade. EDIFACT dabbled with XML/EDIFACT, scientists mocked up a DICOM XML version and released DICOMweb, and the Organization for the Advancement of Structured Information Standards (OASIS) was created "to define an XML framework for exchanging authentication and authorization information", creating the Security Assertion Markup Language (SAML) that drives our SSO Data Model. Even NCPDP, relatively new on the standards scene, created SCRIPT XML and began to put in place requirements to move to the format (NCPDP 10.6). As mentioned above, HTTP becomes the comm method du jour to transport standards' payloads, with TLS (a secure successor to SSL) encrypting the information.

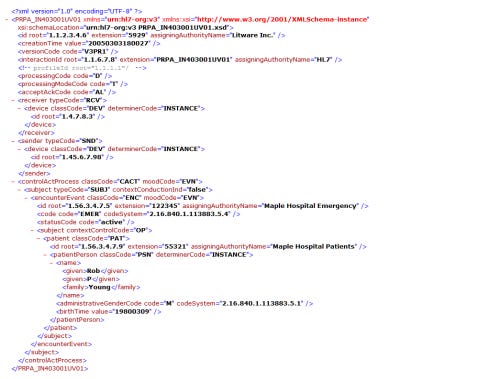

HL7 was not to be left out of the party. High off the success of HL7v2 in the 90s, the workgroup committees latch onto these technologies and march eastward towards a metaphoric Stalingrad with HL7v3, an ill-fated XML endeavor meant to put semantic structure where HL7v2 had flexibility.

Better to read than delimited formats, unless you like asterisks or pipes

Designed by information architects rather than implementers, many view HL7v3 as a failure. Summarizing a few of those opinions, HL7v3 struggled because:

Vendors never saw the reason to spend development hours to switch for use cases that were already working, given the investment into software and specialized knowledge.

In providing consistency, V3 lacked the flexibility required for actual operations and implementation.

Expansive, detailed specifications required experts to understand the Reference Information Model (RIM) and use the supported tools. It got expensive. Very expensive.

While they chose HTTPS as the transport mechanism, they also added a bunch of additional wrappers (SOAP and ebXML) that made an already complex situation unmanageable. In terms of communication and security standards, MLLP and VPN are like yelling your message across a room with really soundproof walls, whereas ebXML/SOAP/HTTPS is like having your main message translated into Chinese, wrapped in Esperanto, and sent over in binary. Overly complex and a lot of arcane knowledge.

As a result, it is used only in a handful of use cases that require tightly structured data and no implementation-specific variation, such as reporting to statewide or national registries (looking at you, the Netherlands). Largely speaking, though, base HL7v3 was a lot of theory, a lot of work, and a lot of money for no reason. It was a classic case of smart people making dumb things (or the wrong thing) and is a testament to the value of homing in on needs from the user/implementer/customer perspective (a fundamental perspective we here at Redox talk a lot about). But the next decade would be built upon these lost years, the crumpled remains of countless brackets, information modeling, and conformance claims serving as a foundation for not one, but two drastic interoperability successes.

HL7's struggles were not limited to just HL7v3, though. A Cold War of sorts was brewing between the standards body and NCPDP, as the former dominated inpatient medication exchange with its HL7 RDE transaction, whereas NCPDP had begun to see widespread adoption of its SCRIPT standard through Surescripts (a company founded by pharmacies to facilitate e-prescribing) and RxHub (a company founded by pharmacy benefit managers to facilitate medication history exchange). The decade saw much work to map HL7 to NCPDP, but tensions ran high, as mapping and implementation were difficult. As the 2000s drew to a close, Surescripts and RxHub merged (somewhat surprisingly, since benefit managers and pharmacies are traditionally rivals due to differing incentives), solidifying the new Surescripts Network as the sole middleman to conduct all exchange. With the government giving backing to NCPDP as the "official" standard, Surescripts flexed in a big way with SCRIPT 10.6, requiring EHRs to migrate fully if they wanted to integrate with the network.

IHE, on the other hand, had fun in the 2000s. With the success of their seven original integration profiles (aimed at defining radiology workflows using HL7 and DICOM foundations), they expanded their reach, tackling security and infrastructure standards in 2003 and then many correlated areas (domains) addressing specific problems in healthcare:

Lab in 2003

Patient Care Coordination in 2005

Radiation Oncology in 2005

Anatomic pathology in 2006

Cardiology in 2006

Quality, Research and Public Health (QRPH) in 2007

Patient Care Device in 2008

Pharmacy in 2009

Eyecare in 2009

In doing so, however, IHE begins to drift from its original stance as solely a workflow-defining, but not standards-creating, body. With QRPH, for instance, IHE defines the RPE transaction, a new message intended for use between EHRs and Clinical Trials Management Systems.

Another event of note (perhaps the more important one) is the creation of the SOAP-based Cross-Enterprise Document Sharing (XDS) and Cross-Enterprise Document Reliable Exchange (XDR) profiles within the IT Infrastructure domain. While it would take most of the decade to sort out the correct technical layers that would be used to support these profiles (leading to the relaunch of XDS with more prevalent technologies being called XDS.b) and to create related profiles such as Patient Demographics Query (PDQ) and Cross Community Patient Discovery (XCPD), these profiles would be foundational in the coming decade for enterprise interoperability and prove to be one of IHE’s biggest successes behind perhaps only their initial radiology profiles.

Just about the best 2010s decade photo I could find

Welcome back to the present. A lot of movement recently, so let me try to summarize:

Mobile. FAANG. IoT. Refugee crisis. MCU. Artificial intelligence. EDM. Border wall. Miley Cyrus's descent into insanity and back. Blockchain. Golden State and Patriots dynasties. Commoditization. Trade war. Russian intrigue. Cardi B. Cloud. DISRUPTION.

It's been a series of ups and downs, unmitigated economic progress, and previously unfathomable socio-political crises punctuated regularly by truly thrilling leaps forward in how we interact with technology in our everyday lives.

Fresh off its lost decade, HL7 has its first rebound success with the Consolidated Clinical Document Architecture (C-CDA) format. This format combines the structure of HL7v3 with human-readable sections, allowing simpler vendors to just show the data to users and advanced vendors to process this rich data summarizing a patient's care (allergies, meds, problems, and much more) in more complex ways. Side note: Nick Hatt here at Redox has an enlightening summary of C-CDAs that is worth reading as a primer to the next few paragraphs if you're unfamiliar with the format.

In the hectic years of the 2000s, HL7 again refactored ASTM content (yep, they're still around), taking their Continuity of Care Record and adapting it with the HL7 Clinical Document Architecture (CDA) specifications to create the CCD (Continuity of Care Document) standard. This initial pass at the standard was not well-defined and lacked examples, leading to divergent implementations by vendors. Meaningful Use 1 did nothing to close the gap, allowing EHR vendors to use either a CCR or a CCD. However, Meaningful Use 2 (finalized in 2012) called out the use of the CDA standard in general, and in particular, the use of C-CDA documents for data exchange. By requiring that healthcare providers use C-CDA document exchange regularly in care transitions, the competing standards issue was put to rest.

Also notably, this legislation would propel forward the use of IHE’s aforementioned XDS technologies, encouraging EHR vendors in some ways to adopt that transport technology for query-based exchange. Unfortunately for IHE, when it came to pushing transactions, while MU2 encouraged the use of XDR as an option, it mandated the use of Direct messaging, an alternative transport method. As a result, EHR vendors chose to develop support for the latter in most cases.

All jokes aside, C-CDA was also successful because it finally solved a problem in healthcare that didn't already have a solution. Most of HL7v3 was caught up in re-inventing the wheel and fixing HL7v2's weak points rather than looking at where standards had not yet offered a solution to a problem. C-CDA is now the foundation for the exchange of a patient's data during a transition of care between organizations, something that did not exist before. Millions of faxes a year are replaced with this type of exchange and there's an increasing focus on using the content-rich data in new and exciting ways, such as a more comprehensive patient Longitudinal Plan of Care, better data reconciliation, or advanced analytics. Additionally, for Redox's purposes, C-CDA is the well-deployed foundation of our ClinicalSummary Data Model, something our customers can use broadly not only to get allergy, medication, and diagnosis information, but a variety of other data types.

The advent of mobile and cloud technologies has led to a large-scale shift from SOAP with XML payloads to REST with JSON payloads for vendor APIs. Vendors in other industries, like Apple, Salesforce, and Google, see success in offering developers paid experiences and dedicated marketplaces to showcase their creations (app ecosystems). On the architecture side, SOA has been replaced with microservices.

My colleague Nick Hatt already has put out a lot of content regarding FHIR and I highly suggest looking at any of those resources. But to summarize, a really smart Australian (Grahame Grieve) saw HL7v3's failings and proposed a new way of interacting that used the best technologies of this decade (REST, APIs, etc). Moreover and most importantly, like CDA, this new technology solved new problems that didn't have standards-based solutions (in this case, query-based workflows for edge systems). Remarkably, the resources and documentation are entirely available without sign-ups or fees, which is a drastic change from the HL7 of yesteryear with the faxing of standards. The excitement around FHIR (a metaphoric HL7v4) is palpable in the industry.
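For a taste of why the REST approach feels so different from the wrappers-on-wrappers era, here is a small Python sketch that builds a FHIR-style search URL. The base URL and the `build_search_url` helper are hypothetical; `family` and `birthdate` are search parameters I'm reasonably sure exist for the Patient resource, but treat the specifics as an illustration rather than a reference.

```python
from urllib.parse import urlencode

# Hypothetical server address, used purely for illustration.
BASE_URL = "https://fhir.example.org/baseR4"


def build_search_url(base, resource_type, **params):
    """Build a REST-style search URL: [base]/[resource type]?name=value&..."""
    url = f"{base.rstrip('/')}/{resource_type}"
    query = urlencode(params)
    return f"{url}?{query}" if query else url


url = build_search_url(BASE_URL, "Patient", family="Doe", birthdate="1980-01-01")
print(url)
```

A plain HTTP GET against a URL like that (with the appropriate authorization) is the whole query: no envelope, no bespoke transport, just a resource type and some parameters, with JSON coming back.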

This is a meme. Memes became popular in the 2010s.

NCPDP and X12 have been evolutionary rather than revolutionary this decade. They're still plugging away revising their standards and adding new transactions, which (assuming industry adoption happens) is ultimately good for the patient in terms of offering new functionalities, like transfer of prescriptions from pharmacy to pharmacy.

In this decade IHE has continued its expansion into other healthcare domains:

Dental in 2012

Endoscopy in 2013

Surgery in 2015

IHE has had a few misses in the past decade. Taking the lead on important healthcare problems like provider directories, clinical decision support, and mobile development in the healthcare space shows that IHE could identify the biggest problems, but adoption of the resulting standards (HPD, GAO, and the 2014 version of MHD) has been slow, and they now look to be largely superseded by FHIR alternatives.

As such, IHE is finding its new identity in the FHIR world. With many profiles firmly entrenched in XDS technologies, there’s some threat to their status quo, in that rewriting of those profiles may be necessary in order to stay relevant and accurate to what’s occurring in the real world. For example, if enterprise interoperability shifts from XDS.b technologies to FHIR underpinnings, IHE will need to accordingly keep their profiles in sync. Thus far, they seem up to the challenge, but it’s an interesting change from the previous decade, where IHE profiles drove standards adoption, rather than standards adoption driving IHE profiles. Also, it will be interesting to see whether IHE will maintain duplicate profiles (i.e. an XDS version for B2B and a FHIR version for “mobile”) for all their transactions.

So what will the future bring? What's next for standards? A few guesses:

Images: DICOM will be a big mover in the coming years. The desire to send images and image-related data across organizations will only grow now that C-CDA interoperability is fairly well deployed. It will be interesting to see what consensus the industry comes to in regards to standards or whether a single player (a la Surescripts) will arise to dominate that conversation. It’s definitely a bit contentious already:

NCPDP: Given that their standards are overwhelmingly used for case-specific enterprise interoperability (sending and receiving between institutions) and that their standards' content increasingly includes patient clinical information that's not just a medication (like height/weight, allergies, etc.), a shift to a CDA-like format might make sense, keeping it in line with the C-CDA used for transfers of care and allowing for the benefits of free text versions of the encoded information. Or will they skip that step and do a FHIR/API derivative?

Decision Support: CDS Hooks is a relatively new standard that's going to heat up big time. Given the recent focus on AI and machine learning, the demand for real-time feedback into end-user workflows will likely grow, as it’s the most straightforward application of such technologies. Looking forward, expect other action points like note signing (or even draft saving), the addition of problems to the patient's chart, or more mundane (but very important) administrative tasks for schedulers, registration staff, or back-office users (Registration Decision Support? Admin Decision Support?) to hook into centralized, cloud-based best-practice repositories. HL7 will want to manage those standards, as will other standards bodies and vendors.

API Growth: It will be interesting to see how HL7 and other standards bodies react as they lose some control with the growth of app ecosystems offering easy-to-use, but non-standard functionalities. Understanding of new web technologies is going to increase across the industry. FHIR will continue to grow rapidly, but regular, slow versioning will be important if vendors are to keep up. The recent normative version is a big step for reducing the development burden for vendors. Developers are seeking high-quality development environments offering crystal clear documentation and superlative support to help them create new, exciting things. All of this means integration gaps that standards haven't filled will be filled in different ways by different EHR vendors as they expand their API programs.

Continued Need for Abstraction and Simplification: You thought you’d get away without a pitch, didn’t you? 😉 The challenge for vendors involved in digital health only increases in this new and exciting era. As the history of standards in healthcare grows (and even more so as the API ecosystem era begins), Redox continues to make this industry’s integration legacy simple and easy to use by abstracting away this tangled history and dozens of acronyms behind our API. No matter what proliferation of standards occurs, Redox remains committed to fully supporting all communication methods, all content standards, and all security mechanisms to provide a consistent, standardized, and scalable experience for developers.

Don’t be this guy.

Other thoughts:

This is intended as a slightly humorous and non-rigorous account of the history of healthcare technology. I apologize for any offense, as none is intended.

It is entirely possible I am mistaken in some of the areas discussed here. If there are errors, please contact me and I am happy to update/correct.

A few additional sources:

IHE Primers from the early 2000s. All are worthwhile to peruse for some additional perspective, but Part 4 has some premium healthcare tech metaphors. Part 6 alone is worth the read for the most ambitious crossover event in history (Tolkien meets radiology):

Nice perspective on the late 2000s view of enterprise image exchange

FHIR post (but actually learned a lot, great work!)